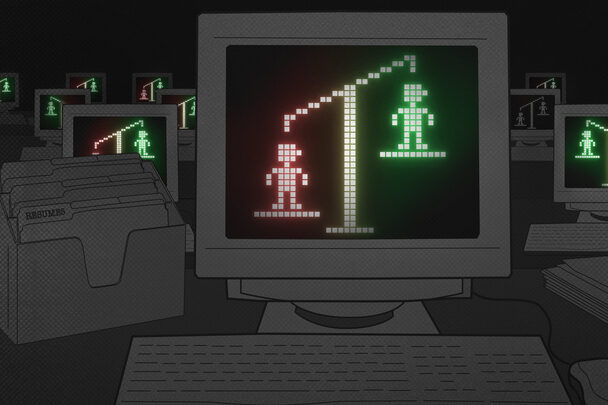

For those excited — or concerned — about how AI could reshape society, Colorado is a place to watch, as the first state in the country to roll out comprehensive regulations on the use of artificial intelligence systems in companies’ decision-making.

The new law won’t go into effect until 2026, and a state task force will be working on updates to it in the meantime. Backers say the goal is to protect the public from any potential bias or discrimination embedded in AI systems, and to set guardrails to make sure the technology is used ethically as companies continue to incorporate it and expand its role in their decision-making.

“Whether (people) get insurance, or what the rate for their insurance is, or legal decisions or employment decisions, whether you get fired or hired, could be up to an AI algorithm,” warns Democratic state Rep. Brianna Titone, one of the bill’s main sponsors.

Colorado’s new law requires companies to inform people when an AI system is being used, and, if someone thinks the technology has treated them unfairly, allows them to correct some of the input data or file a complaint. It won’t allow an individual to sue over AI use, but it sets up a process to look into potential consequences for bad actors.

“If you were fired by an AI process and you say, ‘well, this is impossible, there's no way I should be fired by this,’ you can find a resolution through the Attorney General's office to say, ‘we need someone to intervene and to double check that this process actually didn't discriminate and have a bias against that person,’” explained Titone.

The law aims to shine a light on AI-powered decision-making

The law only covers AI technology when it’s involved in consequential decisions. Things like AI-enabled video games, anti-fraud technology, malware and data storage are outside of its scope. The law applies to specific industries: education enrollment, employment, financial and lending services, essential government services, health care, housing, insurance and legal services.

For Democratic Rep. Manny Rutinel, another main sponsor, one key feature is the requirement that developers, and the companies that use their systems, analyze AI algorithms to figure out where different types of discrimination may be occurring. Developers must disclose information such as the data used to train the system, possible biases and any harmful or inappropriate uses of the system.

The idea, Rutinel said, is “to be able to prevent the problem from happening before it happens… We still have a lot to do, but I think this is a great first step, a really significant and robust first step to make sure that technology works for everyone, not just a privileged few.”

While ChatGPT and generative AI put the technology on the general public’s radar, Matt Scherer, an attorney at the Center for Democracy and Technology, said companies have already been using various automated systems, not even referred to as AI, to make employment decisions for at least the last eight years.

“We really have so little insight into how companies are using AI to decide who gets jobs, who gets promotions, who gets access to an apartment or a mortgage or a house or healthcare. And that is a situation that just isn't sustainable because, again, these decisions… make major impacts on people's lives,” he said.

Scherer believes companies have made dramatically overblown claims about how accurate, fair and unbiased these systems are in making decisions. He’s concerned the new law doesn’t allow an individual to sue for damages.

“There's definitely a lot of worries among labor unions and civil society organizations that this bill just doesn't have enough teeth to really force companies to change their practices,” said Scherer.

He said the ultimate impact may be determined by the courts, if various aspects of the law are challenged. Still, he sees it as a sorely needed foundation to create transparency “rather than the wild Wild West that's existed out there so far.”

Gov. Polis' warning to lawmakers

When Democratic Governor Jared Polis signed SB24-205, he told lawmakers he did so with reservations, writing, “I am concerned about the impact this law may have on an industry that is fueling critical technological advancements across our state for consumers and enterprises alike.”

He said it would be better for the federal government to create a cohesive, national approach so there’s a level playing field between states, and to prevent companies from having to deal with an undue compliance burden.

However, Polis said he hopes Colorado’s law furthers an important and overdue conversation, and he asked the sponsors to keep working to refine and amend the policy before it goes into effect. To that end, Colorado has created a 26-member AI impact task force that will come up with recommendations by next February for Polis and the legislature.

Polis criticized the bill in particular for not just focusing on preventing intentionally discriminatory conduct but for going further to “regulate the results of AI system use, regardless of intent.”

“I want to be clear in my goal of ensuring Colorado remains home to innovative technologies and our consumers are able to fully access important AI-based products,” wrote the governor.

The Colorado law is based on a similar proposal the Connecticut legislature considered, but didn’t pass, earlier this year. Other places have instituted narrower policies; for instance, New York City requires employers using AI technologies to conduct independent “bias audits” on some of the tools and share the results publicly.

“The states are clearly looking at each other to see how they can put their own stamp on the regulation,” said Helena Almeida, vice president and managing counsel of ADP, which develops AI systems for HR applications, including payroll. The company’s clients include many members of the Fortune 500.

“It's definitely going to have an impact on all employers and deployers of AI systems,” said Almeida of Colorado's AI law.

“This is, for us, an additional regulation that we will have in mind, but it's something that we've been thinking about for a long time and that we're really laser-focused on in terms of all of our AI use cases,” she added.

A work in progress

Colorado’s effort at regulation illustrates the deep tension in the field right now, between improving AI’s usefulness for businesses and ensuring its fairness and reliability for the people affected by it, according to Michael Brent, director of the responsible AI team at Boston Consulting Group, which helps companies design, build and deploy AI systems.

“Companies have a desire to build faster, cheaper, more accurate, more reliable, less environmentally damaging machine learning systems that solve business problems,” Brent explained.

Brent’s job is to identify ways in which AI systems might inadvertently harm communities and then mitigate that likelihood. He thinks Colorado’s law will increase transparency about what companies are doing and give the public more awareness about how they want to interact with a given AI system.

“What kind of data do they think they want to share with it? They can get into that space where they're having that moment of critical reflection, and they can simply say to themselves, ‘you know what? I actually don't want a machine learning system to be processing my data in this conversation. I would prefer to opt out by closing that window or calling a human being if I can,’” said Brent.

For all the legislature’s focus on creating comprehensive regulations, Democratic Rep. Titone said policymakers are very much at the beginning of figuring it out with the tech industry.

“This is something that we have to do together and we have to be able to communicate and understand what these issues are and how they can be abused and misused and have these unintended consequences, and work together,” said Titone.